Interactive poetry breeding through Mixtral base model LLMs

Written by Joel Lehman on

exiled prophets -- ten thousand

language models incant

the tokens yet to be,

sinking further into

query space; a dry desert

of symbols, scourged and

scarred by loss functions

devoid of mystery; hungering

for some sigil beyond the

asymptote, some sign

of god's hidden hand

these were the last days

of the old world.

TLDR: Base model LLMs are powerful pattern-completion engines. With minimal prompting they can interactively breed text (like poetry, or quotes, or Python programs) to complement human creativity. While this post explores poetry, the same approach can evolve text of any kind.

Base models complete patterns

A base model (also called a "pretrained language model") is trained to predict the next word from previous ones. The result is a powerful pattern-completion machine:

>> base_model("1 2 3 4 5")

" 6 7 8 9 10 11 12 13 14"

>> base_model("alaska:juneau, ohio:columbus, kansas:")

"topeka"

>> base_model("drip:gush::dent:destroy, walk:legs::chew:")

"teeth"

For these examples I'm using the Mixtral-8x22B base model through deepinfra's OpenAI-like API.
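The `base_model(...)` calls in this post are shorthand. Here is a minimal sketch of what such a helper might send and parse, assuming an OpenAI-style text-completions endpoint. The model identifier and the sampling defaults are assumptions, not the post's actual settings, and the HTTP call itself is left out so the sketch runs offline.

```python
# Sketch only: builds the request body for an OpenAI-style completions
# endpoint and extracts the generated texts from its JSON response.
# MODEL and the sampling defaults are assumptions, not the author's settings.

MODEL = "mistralai/Mixtral-8x22B-v0.1"  # assumed identifier; check your provider


def build_request(prompt, responses=1, max_tokens=32, temperature=1.0, stop=None):
    """Return the JSON-serializable body for a text-completion request."""
    body = {
        "model": MODEL,
        "prompt": prompt,
        "n": responses,            # number of independent samples
        "max_tokens": max_tokens,
        "temperature": temperature,
    }
    if stop is not None:
        body["stop"] = stop        # e.g. "|" or "---" to end a sample early
    return body


def parse_response(response):
    """Extract the completion strings from a completions-style response dict."""
    return [choice["text"] for choice in response["choices"]]


# Offline demonstration with a hand-written response of the expected shape.
fake_response = {"choices": [{"text": "topeka"}]}
request = build_request("alaska:juneau, ohio:columbus, kansas:")
print(request["prompt"], "->", parse_response(fake_response))
```

If a provider does not honor `n`, sending the same request several times has the same effect.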

Instruction-following models follow instructions

The most popular language models (like ChatGPT) are base models that are further trained (in various ways) to follow instructions. While instruction-following models are amazing, they're different animals, more domesticated ones:

>> gpt4("1 2 3 4 5")

"It seems like you're listing numbers sequentially. [...]"

>> gpt4("alaska:juneau, ohio:columbus, kansas:")

"The capital of Kansas is Topeka."

>> gpt4("drip:gush::dent:destroy, walk:legs::chew:")

"This is a classic analogy problem, framed in the format: [...]"

Base models can generate variation

Base models have an austere beauty: billions of parameters trained only to grind out patterns latent in colossal text archives.

You can leverage them in creative ways, for example to create new variations of some implicit pattern:

>> base_model("|cat|elephant|rabbit|dog|",responses=3)

["wolf","owl","frog"]

>> base_model("|madam|racecar|kayak|",responses=3)

["rotor","level","reviver"]

>> base_model(

"|she danced with ease|the dark clouds parted|my favorite cat meowed|",

responses=3

)

["the warm sun shone on her coat",

"the skies were clear",

"ske asked for a kiss"]

The base model tries to "guess the pattern" and continue it, whether to output another animal, palindrome, or short sentence.
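The pipe-delimited prompts above can be built and parsed mechanically. This is a rough sketch under stated assumptions: the helper names are hypothetical, and the `raw` strings are hand-written stand-ins for model output rather than real samples.

```python
def pipe_prompt(items):
    """Format examples as |a|b|c| so the model continues with another item."""
    return "|" + "|".join(items) + "|"


def first_item(completion):
    """Keep only the text before the next '|' delimiter, trimmed."""
    return completion.split("|", 1)[0].strip()


def new_items(completions, seen):
    """Parse raw completions, dropping blanks, repeats, and copies of the seeds."""
    results = []
    for raw in completions:
        item = first_item(raw)
        if item and item not in seen and item not in results:
            results.append(item)
    return results


seeds = ["cat", "elephant", "rabbit", "dog"]
prompt = pipe_prompt(seeds)
# Hand-written stand-ins for raw model output (not real samples).
raw = ["wolf|horse|", "owl|", "cat|dog|", " wolf |"]
print(prompt)
print(new_items(raw, seeds))
```

Cutting each completion at the first `|` matters because a base model will happily keep listing items until it runs out of tokens.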

In fact, this was the simple insight behind the paper Language Model Crossover -- that you can create evolutionary algorithms from very simple base model prompts.

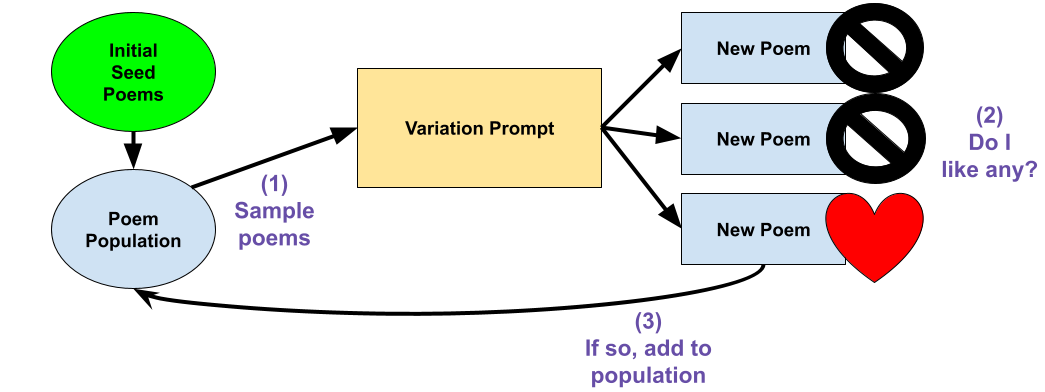

Interactive evolution with base models

By giving a base model a list of three or four examples (of anything), implicitly you're asking it to create something in the spirit of those examples; like a form of evolutionary breeding.

Then you can take the best or most interesting things the LLM outputs, and put them back into the prompt, rinse and repeat. It's a human-in-the-loop evolutionary algorithm, which also goes by the term interactive evolution.

# let's focus on the animal example above

>> base_model("|cat|elephant|rabbit|dog|",responses=3)

["wolf","owl","frog"]

# take wolf, lose elephant, and go in a furry mammal direction

>> base_model("|cat|dog|rabbit|wolf|",responses=3)

["tiger","bear","sheep"]

# let's take tiger and bear, bring back elephant, and go towards big/ferocious

>> base_model("|tiger|elephant|wolf|bear|",responses=3)

["coyote","mammoth","crocodile"]

# take mammoth & crocodile and do one more round

>> base_model("|elephant|bear|crocodile|mammoth|",responses=3)

["sabertooth","rhinoceros","mongoose"]

# what the hell, let's make compound animals

>> base_model("|mammoth crocodile|mongoose-bear|rhinoceros tiger|",responses=3)

["shark-dragon","spectral deer","turtle tiger"]

For fun let's visualize those final creatures using DALL-E.
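The breeding rounds in this section follow one loop: generate candidates, let a person pick, repeat. Here is a minimal sketch of that loop, with the model call and the human choice both replaced by hypothetical stand-ins so it runs offline.

```python
def evolve(population, generate, select, rounds=3, samples=3):
    """Human-in-the-loop evolution: generate candidates, keep what the selector picks.

    generate(population, samples) -> list of new candidate strings
    select(population, candidates) -> the next population (the human's choice)
    """
    history = [list(population)]
    for _ in range(rounds):
        candidates = generate(population, samples)
        population = select(population, candidates)
        history.append(list(population))
    return population, history


# Stand-ins so the sketch runs offline; both are hypothetical.
canned = iter([["wolf", "owl", "frog"], ["tiger", "bear", "sheep"]])


def fake_generate(population, samples):
    return next(canned)[:samples]


def keep_first_drop_oldest(population, candidates):
    # A person would choose here; this stub keeps the first candidate.
    return population[1:] + [candidates[0]]


final, history = evolve(["cat", "elephant", "rabbit", "dog"],
                        fake_generate, keep_first_drop_oldest, rounds=2)
print(final)
```

In real use, `generate` would wrap the base model and `select` would show the candidates to a person and return whatever they keep.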

Breeding Poetry

charlie's finches

don't tell stories

'round the campfire,

yet written in their bones

is the tale of survival

So far we've evolved only single words, but large base models can easily manage more complexity. With the latest Mixtral model, we can do surprisingly reasonable things with poetry (which is often difficult for models, and for instruction-following ones in particular).

First we create a prompt with a few hand-chosen poetry fragments, put together in a somewhat-arbitrary format, here, with a line of dashes separating each poem.

Here's about half of a longer prompt:

"""a poem:

like the fox

I run with the hunted

and if I'm not

the happiest man

on earth

I'm surely the

luckiest man

alive.

---

a poem:

Sometimes hidden from me

in daily custom and in trust,

so that I live by you unaware

as by the beating of my heart,

[...]

"""

By prompting with a few poem fragments, we're implicitly requesting the base model to output similar poems; and implicitness is sometimes convenient. Just as it's hard to describe music in words, it's hard to describe the feel of the poetry I want to make.
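The prompt layout shown above can be sketched as a small formatting function. The post does not show its own `format_poems`, so this is a guess at one way to write it: the exact whitespace, and the choice to end the prompt with an open `a poem:` header, are assumptions.

```python
SEPARATOR = "---"
HEADER = "a poem:"


def format_poems(poems):
    """Join poems under a repeated header, ending with an open header.

    The trailing header invites the model to write the next poem; passing
    SEPARATOR as a stop sequence would end the sample after one poem.
    """
    blocks = [f"{HEADER}\n\n{poem.strip()}\n\n{SEPARATOR}\n\n" for poem in poems]
    return "".join(blocks) + f"{HEADER}\n\n"


seed_poems = [
    "like the fox\nI run with the hunted",
    "Sometimes hidden from me\nin daily custom and in trust,",
]
print(format_poems(seed_poems))
```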

When we ask for a stanza explicitly from GPT3.5:

>> gpt35("Write a stanza in the style of Mary Oliver")

"""In the quiet of dawn's tender embrace,

Where whispers of nature softly entwine,

I find solace in each delicate trace,

Of dew-kissed petals in the morning shine."""

The result is a generic ABAB poem (not Mary Oliver's style). It's not easy to prompt it into something much better.

But from the Mixtral base model (the seed fragments were taken from Mary Oliver, Charles Bukowski, and Wendell Berry):

>> base_model( format_poems(seed_poems) )

"""A dove flies up

from the hedgerow, startled by me,

she flutters a moment

above the bare branches,

then away."""

I cherry-picked this result; in practice there's a lot of variance (so I often generate 5-10 samples from which to choose). While this poem won't win any awards, it's not awful -- not generic ABAB, and from a web search it appears to be original.
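With 5-10 samples per round, a little cleanup before reading them saves time. Here is a sketch of one possible filter, assuming samples are cut at a `---` separator. It drops blanks, repeats, and verbatim copies of the seed poems, which is only a crude local check and no substitute for a web search. The sample strings are hand-written stand-ins.

```python
def clean_samples(samples, seed_poems, separator="---"):
    """Trim each sample at the separator; drop blanks, repeats, and seed copies."""
    def normalize(text):
        # Compare poems ignoring case and whitespace differences.
        return " ".join(text.lower().split())

    seen = {normalize(poem) for poem in seed_poems}
    kept = []
    for sample in samples:
        poem = sample.split(separator, 1)[0].strip()
        key = normalize(poem)
        if poem and key not in seen:
            seen.add(key)
            kept.append(poem)
    return kept


seeds = ["like the fox\nI run with the hunted"]
# Hand-written stand-ins for raw samples (not real model output).
raw = [
    "A dove flies up\nfrom the hedgerow\n\n---\n\na poem:\n\nmore text",
    "Like the fox\nI run   with the hunted\n---",
    "   \n---",
    "a dove flies up\nfrom the hedgerow",
]
for poem in clean_samples(raw, seeds):
    print(poem, end="\n\n")
```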

But the more interesting bit is to keep iterating: choose outputs you like, add them into the prompt, and keep generating.

What's beautiful is that the (somewhat wild) base model becomes inspiration, and the process is driven by your tastes (your ability to recognize what you find interesting). You're not wholly gated by your ability to write poetry. But in practice, the process is best as a collaborative dance -- I often edit what the LLM gives me.

One Layer Further: Refining Poems

we're all just trying

to find our way home

all of us looking

for home

just looking for home

So far we've made the artifacts we're working with more complex, by moving from single words like "mammoth" to poems. We can also explore more complicated relationships among artifacts: moving from creating new poems in the spirit of other ones, to iteratively refining a particular poem until it shines.

One of the first examples in this post hinted at this, through solving a word analogy:

>> base_model("drip:gush::dent:destroy, walk:legs::chew:")

"teeth"

Analogies as implicit instructions

Analogical reasoning is powerful; it encompasses instruction-following. For example, spell-checking:

>> base_model("fatther:father,picle:pickle,cheeese:")

"cheese"

Or translation:

>> base_model("ciao:goodbye,hola:hello,zwei:two,fromage:")

"cheese"

Or sequence completion:

>> base_model("1:2:3,z:y:x,ice:water:")

"steam"

And we can make the model stretch, too:

>> base_model("1:2:3:4,z:y:x:w,ice:water:steam:",responses=2)

["plasma","superheated_steam"]

It feels almost mean -- forcing the model to riff beyond what makes much sense. I'm physics-ignorant -- does steam turn into plasma when heated enough? I quite like "superheated_steam" though; it feels like an answer to an improv game.

Taking "superheated_steam" into the prompt, we can force the model further. Here we're breeding sequence-completions, just as we bred words before:

>> base_model("1:2:3:4:5,z:y:x:w:v,ice:water:steam:superheated_steam:",responses=2)

["supercritical_water","plasma_steam"]

New laws of physics unlocked...? Again, this has an improv-game feel to it.
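The sequence prompts above follow a simple rule: show each example sequence one step longer than the one being extended. Here is a small sketch of that rule; the function name and error handling are illustrative, not taken from the post.

```python
def sequence_prompt(examples, target):
    """Show each example one step longer than the target, then leave the target open.

    examples: lists of items with an obvious order, each longer than target
    target:   the sequence to extend
    """
    length = len(target) + 1
    for example in examples:
        if len(example) < length:
            raise ValueError("each example must be longer than the target")
    shown = [":".join(example[:length]) for example in examples]
    return ",".join(shown + [":".join(target) + ":"])


examples = [list("12345"), list("zyxwv")]
target = ["ice", "water"]
print(sequence_prompt(examples, target))

# Breeding step: accept a completion, append it, and ask again.
target.append("steam")
print(sequence_prompt(examples, target))
```

Each accepted completion lengthens the target, so the examples are shown one item longer on the next round, which is what pushes the model past the obvious answers.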

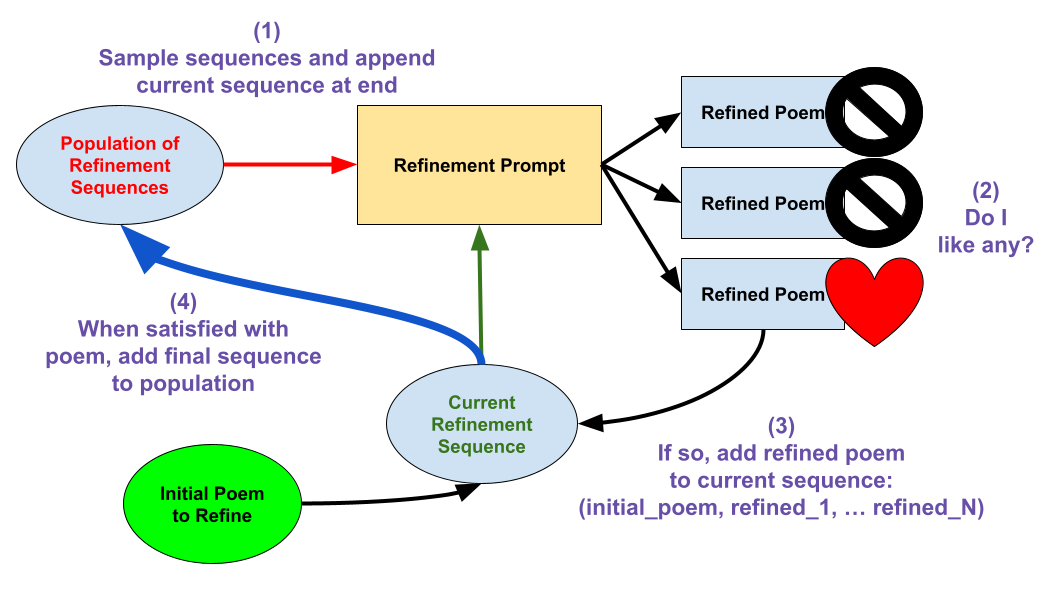

Poem refinement as sequence completion

So, we can do the same thing for poems -- breed sequence-completions of them, as a way to get directed variation: we want to explore in the neighborhood of a poem we like (rather than to generate a brand new one).

Base models work best when you seed them with a few examples of the desired pattern. In this case, we want a few example sequences of poems being refined. You can refine some poems by hand (hard if you're not a good poet). Or...take a poem you like and edit it to make it worse (it's easy to be a bad poet!).

For example, here's a worse version of the dove poem:

"""A big ol' dove jumps up

from the green green bushes, startled by me,

she waits a tic

above the bushes,

then off away."""

If we create a few refinement sequences (from different poems), putting the worse versions before the better ones, and format them together, we get promising results.
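The post does not show how the refinement sequences are laid out, so the following is only a guess at one workable format. The `draft N:` labels and the `===` divider are assumptions. The idea is that every sequence runs from worst to best, and the last one is left open at its next draft for the model to fill in.

```python
SEQUENCE_BREAK = "==="


def format_refinements(sequences):
    """Lay out each sequence as numbered drafts; leave the last sequence open.

    sequences: lists of poem drafts ordered worst to best. The final list is
    the poem being refined, so the prompt ends at its next, unwritten draft.
    """
    blocks = []
    for i, drafts in enumerate(sequences):
        lines = [f"draft {n}:\n\n{d.strip()}\n" for n, d in enumerate(drafts, 1)]
        if i == len(sequences) - 1:
            lines.append(f"draft {len(drafts) + 1}:\n\n")
        blocks.append("\n".join(lines))
    return f"\n{SEQUENCE_BREAK}\n\n".join(blocks)


examples = [["a worse poem", "a better poem", "the best version"]]
current = ["A big ol' dove jumps up", "A dove flies up"]
print(format_refinements(examples + [current]))
```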

Here we tell the base model to refine our dove poem:

>> base_model( format_refinements( worst_better_best_examples + [ [worse_dove, original_dove] ] ) )

"""startled, A dove springs out

from the naked branches

& then off into the sky"""

This is cherry-picked (again, a lot of variance), but I liked the atypical punctuation.

And we can iterate, by inserting the new version into the end of the prompt:

>> base_model( format_refinements( worst_better_best_examples + [ [original_dove, refined_dove] ] ) )

"""a dove in flight, rising

above this world, all dust & dirt"""

As we work on poems, we can add and refine the library of example sequences, guided by what seems to yield the most interesting poems.

Putting it all together

beloved,

hiding among the trees

like bandits, smoking small clouds

between trembling fingers, afraid

of the morning, which lit up everything --

what did it know about our stolen love?

and what do we remember now, years past,

gold leaves again turning brown?

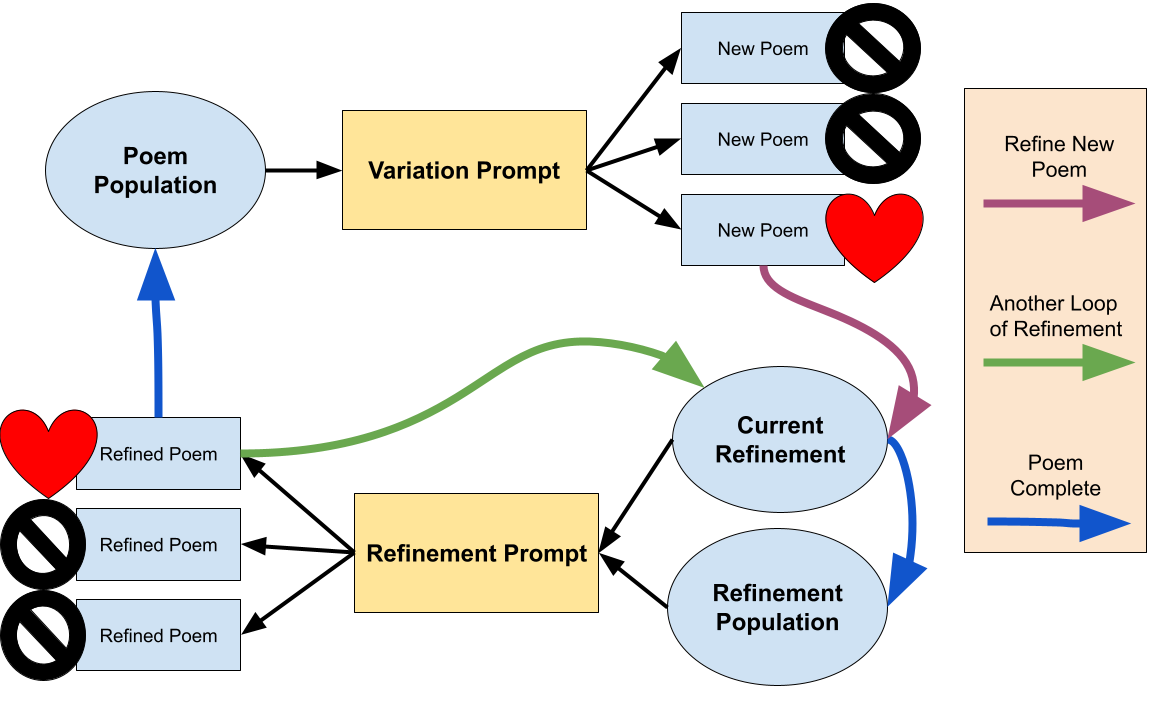

So now we have a way of creating raw variation (prompt the base model with poems you want it to riff in the style of), and a way of refining a particular poem (prompt the base model with sequences of improved poems, ending with the poem you'd like it to improve).

My workflow was to first create new poems with raw variation, and then when I found one that was interesting enough, I would move it over into the refinement prompt. I'd sometimes edit the refinements when I found inspiration.

Then, when I arrived at a poem that felt complete, I would (1) add the refinements that led to that poem into a growing collection of those refinements (for the refinement prompt), and (2) add the final poem into the growing collection of poems (for the variation prompt).

So there's a bootstrapping process, where the prompts themselves become better (or at least more interesting) in behavior over time.
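The bookkeeping behind that bootstrapping can be sketched with two lists. The class and method names are hypothetical; the only behavior taken from the post is that a finished poem feeds the variation prompt and its chain of drafts feeds the refinement prompt.

```python
from dataclasses import dataclass, field


@dataclass
class PoemLibrary:
    """The two growing collections that feed the two prompts."""
    poems: list = field(default_factory=list)        # seeds for the variation prompt
    refinements: list = field(default_factory=list)  # sequences for the refinement prompt

    def finish(self, drafts):
        """Record a finished poem given its drafts, ordered earliest to final."""
        if not drafts:
            raise ValueError("need at least one draft")
        self.poems.append(drafts[-1])
        if len(drafts) > 1:
            # Only a chain with at least one revision teaches refinement.
            self.refinements.append(list(drafts))


library = PoemLibrary(poems=["seed poem one", "seed poem two"])
library.finish(["first draft", "second draft", "final poem"])
library.finish(["a poem that needed no edits"])
print(len(library.poems), len(library.refinements))
```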

A Few More Poems

Throughout the post I've scattered some of the system's outputs. For some (like the poem that kicked off the post), I seeded the first two or three words to direct the model towards a particular topic (e.g. to talk about language models).

Here are a few more.

You likely notice I enjoy minimalish free-verse:

her voice was like

a song on the radio,

playing late at night,

when you're driving down

an empty highway,

and everything feels

like a dream.

Irony:

i've got a

new idea

i'm going to be

the greatest

poet in the

world

watch out

And existential hand-wringing:

at 15,

dostoevsky

woke me up to

life itself.

saw there was

more to the world,

wanted to know it all --

love, loss, joy, death.

read a thousand shelves,

fifteen summers chasing

who i am and why i'm here

found only: there is

no path; no compass;

just wind & water,

the open sea.

torn adrift into

freedom's nausea;

land-dreams no

longer

Lots of existential hand-wringing:

so tell me about

the first time

you died.

did you see it

coming?

were you surprised

by the simplicity

of it?

and were you alone

when it happened?

were you alone?

Conclusion

I love poetry, the intersection of technology and heart, and am inexplicably fond of base models.

While this post explored breeding poetry, you could do the same for algorithmic art (e.g. evolving Python code that creates images, animations, or sound), flash fiction, inspirational quotes, or really anything that can be expressed as text. You could also get meta, and evolve language model prompts. Finally, an interesting future direction would apply base models to help select poems as well (in a similar vein as Quality Diversity through AI Feedback), to stretch each bit of human feedback further.

Follow me on twitter to get updates, or feel free to email me with questions or comments. I can release source code if there's interest.

And a final poem from the system to end on:

i

lost

my

glasses

so

now

i

can't

read

the

news

anymore

Acknowledgements

Thanks to Rosanne Liu and Piero Molino for thoughtful feedback on this post, to Mistral AI for releasing such interesting models, and deepinfra for providing a simple/cheap way to play with them.